Last week on Wednesday, 9 November, Professor Alex Weinreb gave a fascinating talk to an audience of graduate students and professors from the Department of Sociology here at UT Austin.

Professor Weinreb’s talk, entitled “Screw the Models, get back to the data: Or, on the disciplinary dangers of data ex nihilo,” comes out of research he has been doing for his current book project on the mis-measurement of society.

The basic premise of Weinreb’s talk, which has potentially earth-shattering implications for the positivistic social sciences, is that there are manifold errors at the basic level of data collection, particularly in the third world, which may lead to mistaken results in quantitative studies that rely on survey-based research.

Weinreb contends that the past four or five decades of quantitative research have witnessed an impressive growth in the complexity of statistical modeling techniques, and in particular post-facto techniques for “data cleaning” that attempt to fix problems in survey data prior to analysis. Yet, since the 1950s, there has been little social science research aimed at assessing survey errors in the third world and finding better ways to get more accurate data.

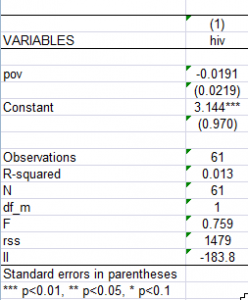

He gave several shocking examples of how survey research has missed big conclusions due to data issues, despite fancy models. First, heavyweight demographers in the 1980s completely missed the process of African fertility transitions, which was happening as they wrote, because their data were not adequate to the task they proposed. Second, a multimillion-dollar cross-national survey of several Asian countries in the 1990s was unable to find any significant results regarding women’s autonomy, which, contrary to theoretical assumptions, seemed to be greater in patrilineal and patriarchal areas than in others.

In sum, whether social scientists miss something big, or are looking for something big and can’t measure it, the answer to both of these errors lies in the data, rather than in more complex models. And the essence of these errors lies in “non-sampling error”. In other words, even where sampling is perfectly random, or adjusted with appropriate weights, a number of other errors continue to creep into datasets. The shocking thing is that non-sampling error accounts for the majority of variance in most data.
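To make the idea concrete, here is a minimal simulation sketch. It is not from Weinreb’s talk: the function name `simulate_survey`, the 30% true rate, and the 20% false “yes” rate are all made-up assumptions chosen purely for illustration. It draws a perfectly random sample, then compares an error-free estimate with one where some respondents misreport.

```python
import random


def simulate_survey(n, true_rate=0.30, false_yes_rate=0.20, seed=0):
    """Return (clean_estimate, reported_estimate) for a random sample of size n.

    All parameter values are hypothetical and only illustrate the mechanism.
    """
    rng = random.Random(seed)
    clean_yes = 0
    reported_yes = 0
    for _ in range(n):
        # Sampling is perfectly random: each respondent has the trait
        # with probability true_rate.
        has_trait = rng.random() < true_rate
        # Assumed non-sampling error: a respondent without the trait
        # still answers "yes" with probability false_yes_rate.
        says_yes = has_trait or (rng.random() < false_yes_rate)
        clean_yes += has_trait
        reported_yes += says_yes
    return clean_yes / n, reported_yes / n


if __name__ == "__main__":
    for n in (100, 10_000, 100_000):
        clean, reported = simulate_survey(n)
        print(f"n={n:>7}  error-free={clean:.3f}  as reported={reported:.3f}")
```

Under these assumptions, a larger sample pulls the error-free estimate toward the true rate, while the reported estimate settles near 0.30 + 0.70 × 0.20 = 0.44 instead. More respondents shrink sampling error, but they do nothing about the misreporting.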

Some types of errors that are commonly seen in third world surveys include variations in results due to translation issues, insider-outsider dynamics, male-female interviewer-interviewee dynamics, and privacy issues (whether or not a third party is present during the interview). Some of Weinreb’s recent work in the Dominican Republic has shown how results vary depending on whether the interviewer knows the respondent personally, is an unknown community insider, or is an outsider to the community. In one illustrative example, respondents were much more likely to falsely claim to know a fictitious person among a list of real personages when interviewed by an outsider, something anthropologists and ethnographers call “sucker bias.” But the problem is that the directionality of any such bias is not consistent. On one set of questions respondents may be more likely to give accurate answers to outsiders than insiders (or to men rather than women), and vice versa for another set. And in another country or part of the same country, or even among different gender and age groups, this situation may be completely different.

There are clearly no easy solutions for these data dilemmas. But further research into data collection methods is one key to improving results. Although there have been advances in data collection methods in the developed world, with regard to the third world this type of research has stalled since the 1950s and 1960s, after the World Fertility Surveys in the 1970s, and later the Demographic & Health Surveys from the 1980s until today, became the nearly universal gold standard for survey research. Having one such standard method for survey research does have the benefit of comparability across place and time. However, it seems to have serious problems of accuracy, since it does not get the best results in all contexts. In other words, the current position of the social science academy is to privilege reliability of data over validity for a number of reasons, including comparability and tradition.

Following his talk, a number of graduate students and professors engaged in a lively discussion with Weinreb, parsing out the details of some of his broader brush strokes, and debating the pros and cons of some of his hypotheses. Department Chair and Professor Christine Williams pointed out that many of these data dilemmas were critiques often leveled by qualitative researchers at quantitative research in general, but noted that it was important to see this type of nuanced discussion from within a quantitative framework, since it is essential to always improve all of our research methods. Professor Pam Paxton alluded to the conversation from the week before at the brown bag presentations of graduate students Amanda Stevenson and Isaac Sasson, pointing out that there are a number of solutions to data problems, even issues of non-sampling error, if researchers take the time to thoroughly diagnose problems and deal with them. She also pointed out that there is an accountability mechanism built into this type of survey research in the form of policies and programs, which are often informed by such social science research and ultimately prove successful or unsuccessful.

Isaac Sasson turned the discussion toward the future of the field and wondered aloud about how these ideas affect the big picture of the knowledge of structure and the structure of knowledge. And graduate student Marcos Perez ruminated about interdisciplinary linkages and the ways in which some of these issues could be solved through collaboration with colleagues in other departments.

Although we don’t yet have the answers to a number of these questions, Professor Weinreb’s work did shed some light on the problems of data assumptions ex nihilo (out of nothing), which ignore non-sampling error. Given the entrenched nature of quantitative research traditions, this is likely to be but a quiet revolution in the social sciences in the immediate future, but a revolution nonetheless, and one to keep our eyes on.